Building AI-Ready Data

The Preparation and Real Work Before the Modelling

We often think that AI breakthroughs mean advanced algorithms and complex model architectures. However, the real secret to AI success isn’t just the model; it’s the data behind it. In fact, advanced AI models are just the “tip of the iceberg,” and 90% of success lies in data foundations.

In other words, AI initiatives mainly depend on the quality and readiness of data. As one expert put it, “AI models are only as good as the data they’re trained on,” because bad data leads to unreliable predictions.

Without AI-ready data, even the best algorithms will stumble.

We can think of them as a pyramid of needs for AI, where the base layers are the foundation, including data collection, cleaning, and organization, which must be solid before the pinnacle (the AI model) can work best.

So, how could we build AI-ready data? We will explore them in the next section.

Sponsored Section

The 2025 data stack is incomplete without a semantic layer.

It’s what makes data trustworthy, analytics consistent, and GenAI enterprise-ready.

Join AtScale and GigaOm for an exclusive look at the 2025 Semantic Layer Radar Report, and learn how to evaluate vendors, align architecture with business impact, and prepare your AI strategy.

📅 Wednesday, October 29, 2025 | 2:00 PM ET | 60 minutes

🎙️Dave Mariani, Co-founder & CTO, AtScale

🎙️Andrew Brust, Analyst, GigaOm

You’ll discover:

Which semantic layer vendors lead the 2025 landscape

How to integrate GenAI + LLMs with governed data

Proven frameworks for scaling and governance

How to measure ROI and business impact

Reserve your FREE spot here👇👇👇

Don’t miss it!

What “AI-Ready” Data Really Means

We hear the term “AI-ready data” frequently, but what does it mean?

In simple terms, AI-ready data is information set up so that AI systems can easily use it and generate reliable results. While nearly every company today has plenty of data, not all data is suitable for AI applications.

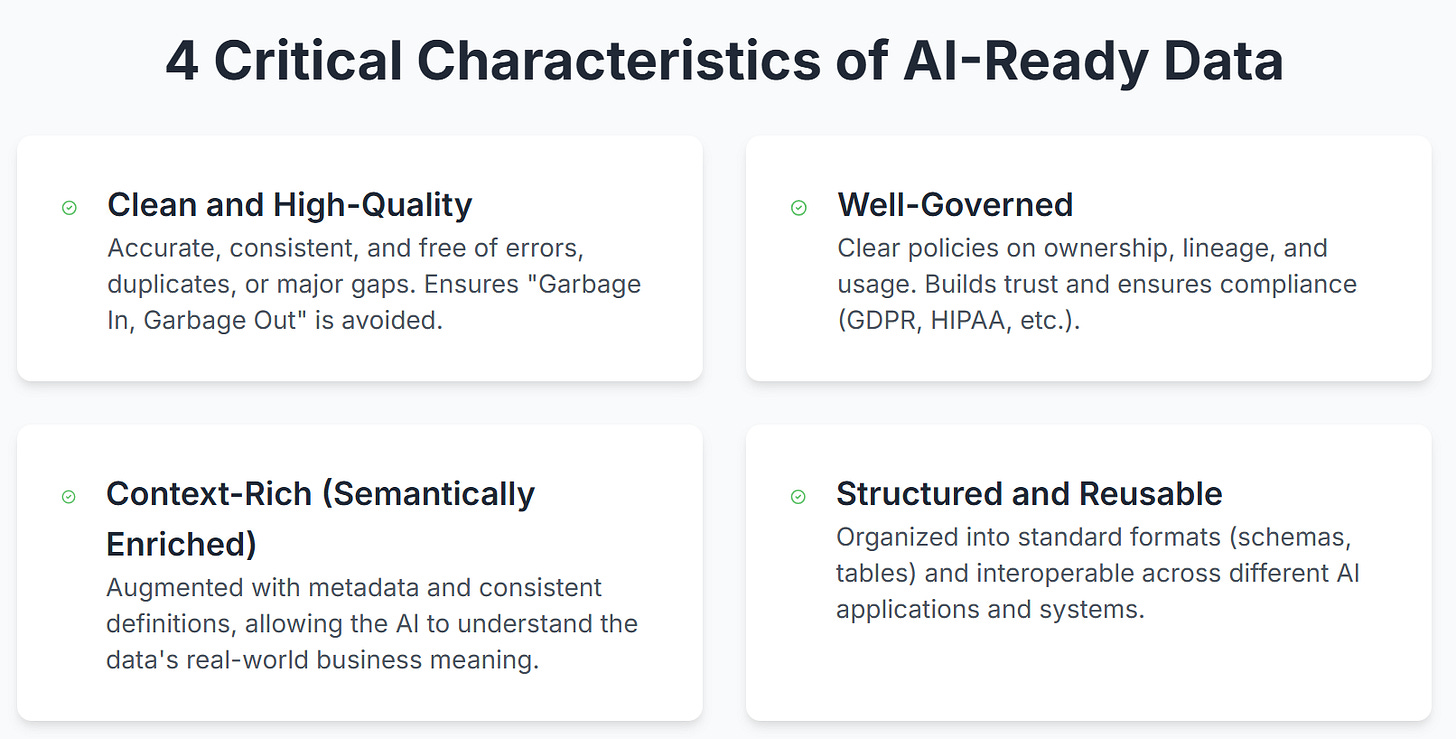

AI-ready data has several defining characteristics:

Clean and High-Quality: It’s accurate, consistent, and free of major errors or gaps. You won’t find the AI stumbling over duplicates, missing data, or typos. Garbage in, garbage out is the principle.

Well-Governed: There are transparent processes and rules about it: who owns it, where it came from, and how it’s allowed to be used. Governance builds trust in the data by ensuring issues like lineage, privacy, and compliance are handled.

Context-Rich (Semantically Enriched): AI-ready data isn’t just raw figures with confusing column names. It’s enhanced with context and meaning through metadata, consistent definitions, or a semantic layer, enabling AI to understand what the data represents in real business terms. For example, an AI can recognize that “Apple” refers to the tech company rather than the fruit if the data is enriched with that context.

Structured and Reusable: The data is organized in a clear format (tables, categories, labeled text/images, etc.) and standardized so it can be easily combined or reused across different AI applications. It’s not stored in isolated silos or random spreadsheets; it’s accessible in a way that many tools or models can work with.

Everything needed for AI technologies must be built on a foundation of clean, well-governed, and context-rich data.

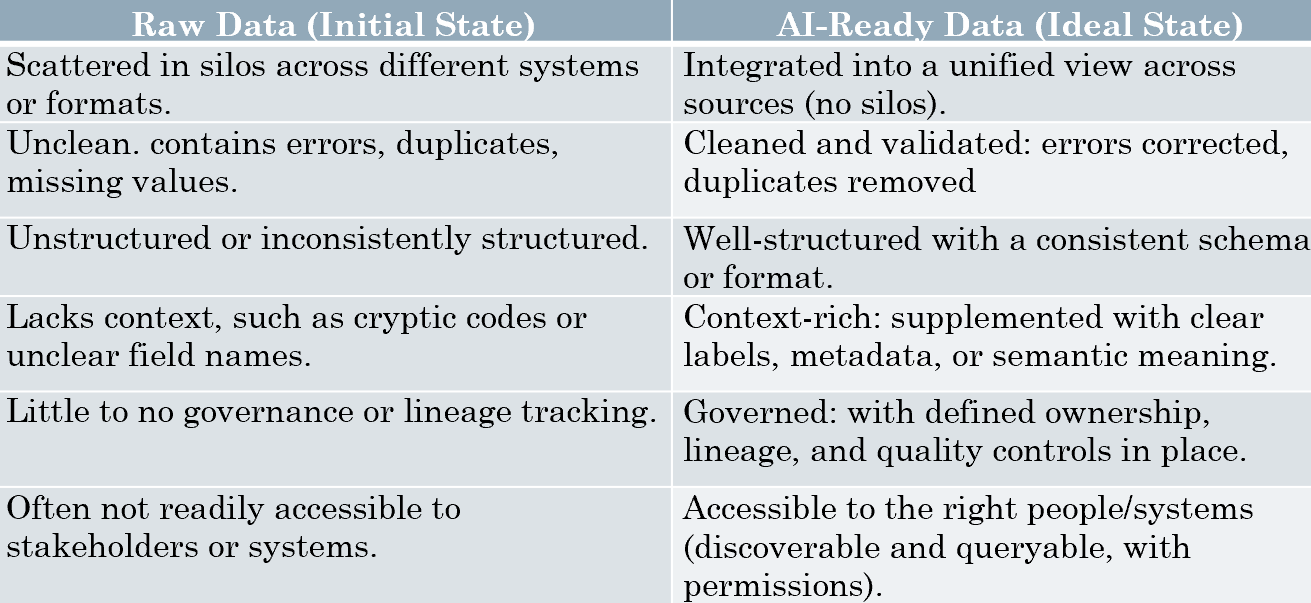

One useful way to grasp the concept is to compare raw data (the starting point) with AI-ready data (the goal). The table below highlights some key differences:

Key Steps to Get Data AI-Ready

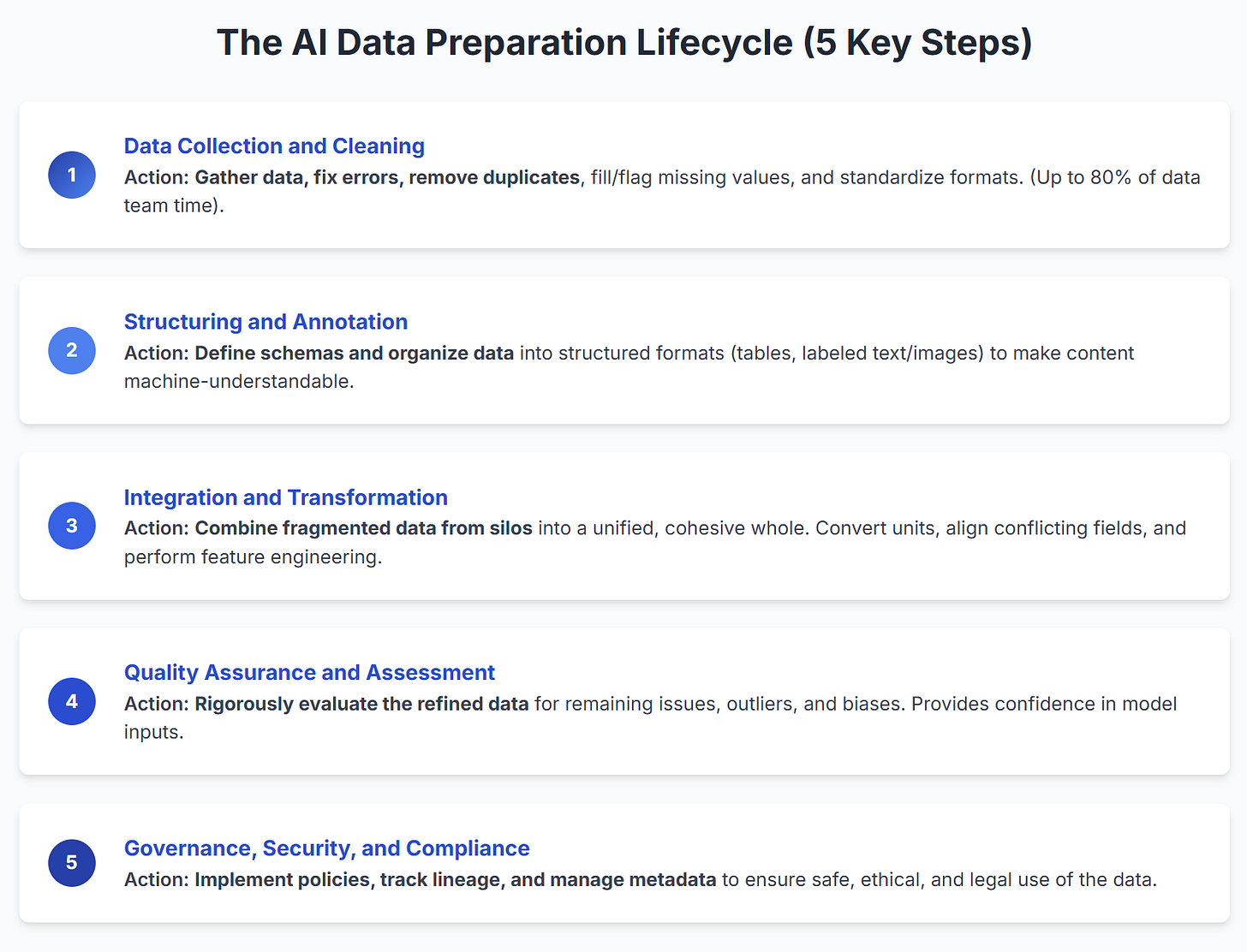

Transforming raw data into AI-ready data requires multiple layers of work. It’s helpful to think of this as a data preparation lifecycle or stack, where each layer adds more readiness.

Below are the key steps (or layers) involved in making data AI-ready:

Data Collection and Cleaning: First, gather data from reliable sources and clean it by fixing errors, filling or flagging missing values, removing duplicates, and standardizing formats like dates and units for consistency. This step is crucial as surveys indicate data teams spend up to 80% of their time on cleaning since models can’t learn from flawed data.

Data Structuring and Annotation: Next, organize data into a structured format with labels or annotations for machine understanding. For example, tag text documents with topics, annotate images with objects, and organize numerical data into tables. Structuring also involves defining schemas to avoid messiness. Labeling features helps AI focus.

Integration and Transformation: Here, we combine and transform data from various sources into a unified dataset by breaking down the data and merging records from multiple sources. Transformation also includes activities such as standardizing standard units, encoding, or creating new features. The goal is to give AI a complete dataset.

Quality Assurance and Assessment: Just like software needs testing, data requires QA. This step involves assessing data quality and fixing issues. Teams use profiling tools or AI assessments to verify values, outliers, biases, or missing key fields. It’s a feedback loop: identify gaps, then improve the data. Proper QA ensures the data used by the model is accurate and unbiased.

Governance, Security, and Compliance: Implement strong data governance practices throughout, especially at the final stage. Establish policies for data access, usage, and compliance with regulations like GDPR or HIPAA. Track data lineage to understand its history and manage metadata. Governance ensures high-quality, well-documented data used ethically and legally by people or AI.

(Optional) Semantic Alignment: Many organizations add a semantic layer or intelligent data model on top of cleaned and integrated data, mapping business concepts and relationships consistently. This layer ensures uniform definitions of terms like “churn rate” or “active user” across teams and datasets. While not essential for being “AI-ready,” it improves data reuse by making data self-descriptive, helping AI models understand data fields.

Each of these steps adds a layer of preparation to the data. By the end of this process, we’ve transformed raw data into a refined dataset that an AI model can easily learn from. It’s important to remember that this is not a one-time task but an ongoing, iterative process.

The AI-Ready Data Tooling Example

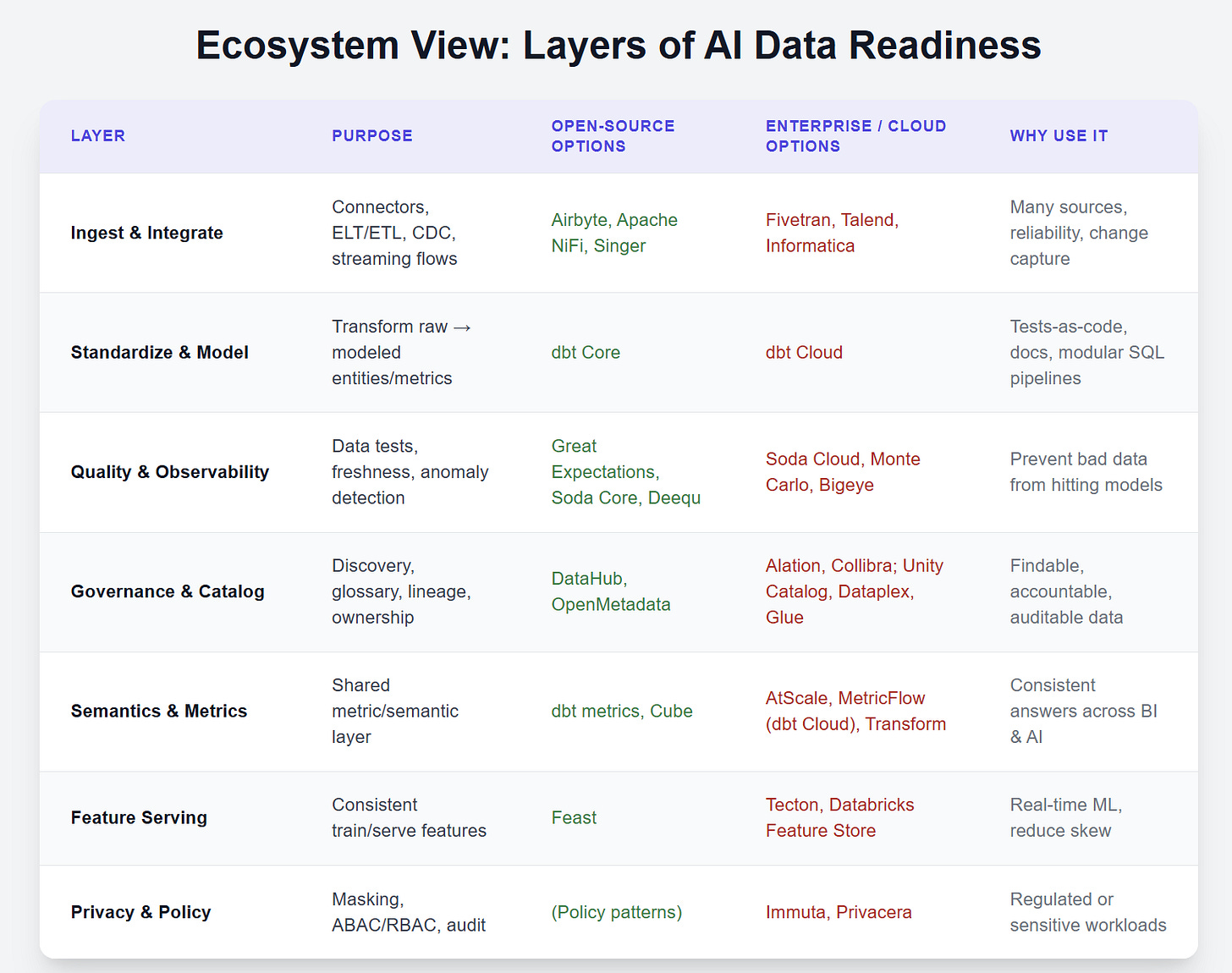

Great models need significant inputs. Think in layers, pick one tool per layer to solve a real bottleneck, then expand as needs grow.

Here are a few tool examples you can reference:

Conclusion

AI success relies more on solid data foundations than on flashy models: without AI-ready data that is high-quality, well-governed, enriched with metadata, and structured for reuse, the algorithms cannot succeed.

Achieving this requires an iterative lifecycle: collect, clean, structure, annotate, and integrate data; run quality checks; and enforce governance and security, with optional semantic alignment.

Use one fit-for-purpose tool per layer to address bottlenecks, expanding as necessary. Investing in this foundation enhances model reliability, explanation, and scalability across the business.

I hope it has helped!

Like this article? Don’t forget to comment and share!