Data Versioning Workflow With DVC - NBD Lite #16

Important feature for your data science project.

If you are interested in more audio explanations, you can listen to the article in the AI-Generated Podcast by NotebookLM!👇👇👇

The machine Learning project is continuous and should be if the business wants to get the values.

It means that we need to keep updating our model to stay relevant.

However, not all updates always mean a better model. There are times when we want to go back into our previous iteration. This is where versioning comes to help.

When you think about versioning tools, it’s often all about Git.

However, one of the open-source versioning tools for machine learning projects is DVC.

So, how could DVC help data scientists? Let’s take a look at how it can version our dataset!

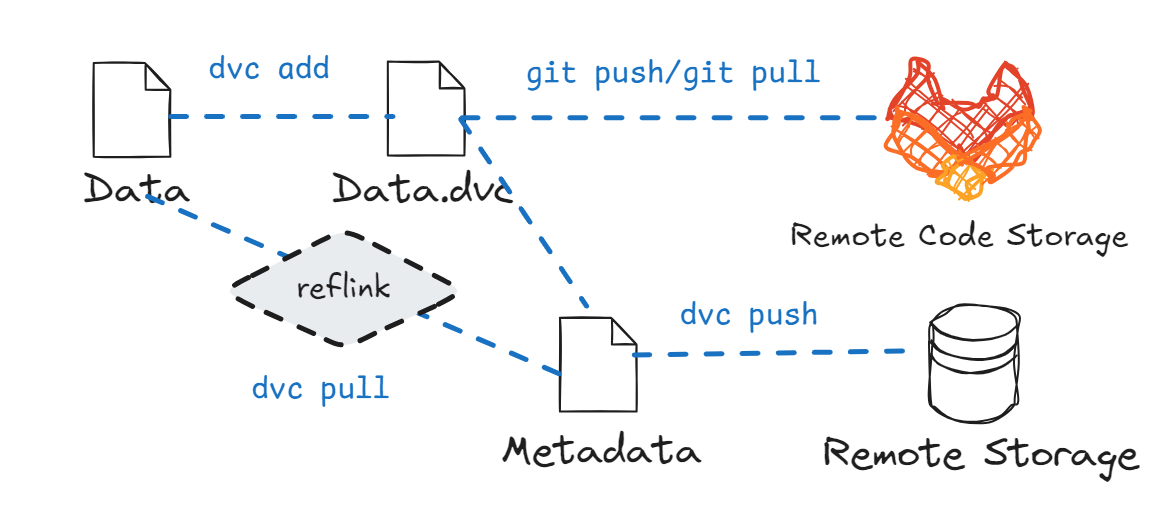

Here is a simple summary of how things work.

DVC For Data Versioning

DVC stands for Data Version Control, the Git for Machine Learning project. It’s a tool that allows us to track changes in our ML project, especially large files.

It works to complement Git, not replace it.

Let’s try out the data versioning process. It would look something like below.

Setting up DVC

We would need to install the DVC initially.

pip install dvcIf you want to use them with Visual Studio Code, you can install them from their marketplace.

For this example, I would use the email spam data from Kaggle for the sample dataset.

As DVC complements Git, we must initiate Git before using the DVC.

git initThen, we can start creating a DVC project within our directory.

dvc addAdding Data to DVC

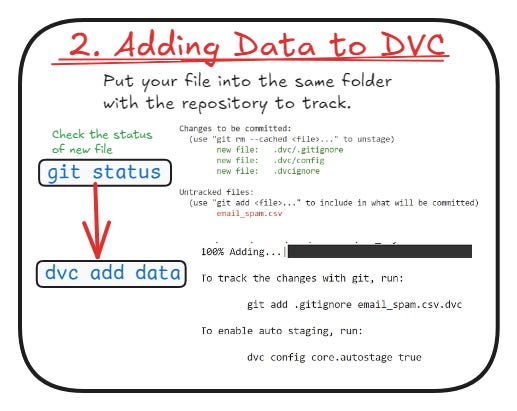

When we create the DVC project, a few files will be created. We can check them out.

git statusWe can start tracking the data with DVC.

dvc add email_spam.csvThe code above specified which data we wanted to track and the result in creating the dataset metadata.

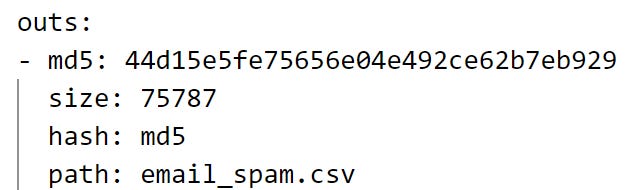

If you check your folder, you will find the DVC file contains information similar to the image below.

This is the cached metadata for your dataset using DVC, and this is how DVC could track even a large dataset.

Version Metadata

What we do is to version our metadata while ignoring the actual dataset.

git add email_spam.csv.dvc .gitignore

git commit -m "Add raw data"As data can be big, what we version is the metadata file instead of the actual dataset.

The DVC file containing metadata looks like below.

Push Data to Remote

The actual dataset would need to be stored somewhere.

To do that, we need to set the remote storage linked to our local storage.

dvc remote add -d myremote s3://mybucket/dvcstoreThis remote can use a cloud system such as S3 and Google Drive. It can even work with the local storage.

If all is going well, you can push them now to track the data.

dvc pushPull Data

Once the data has been tracked remotely, we can download it somewhere else via the pull command.

You can move the work elsewhere and pull the data if the remote is correct.

dvc pullYou can also change the data you have and then push it back into the repository.

With versioning, you can come back to the version you want.

git checkout <commit_hash>

dvc checkoutThat’s all for today! I hope this helps you understand how simple it is to use DVC for data versioning.

Are there any more things you would love to discuss? Let’s talk about it together!

👇👇👇

Previous NBD Lite Series

Don’t forget to share Non-Brand Data with your colleagues and friends if you find it useful!