Develop LLM-Based Applications with Multiple Agents Using Microsoft AutoGen

Use the power of agents to help with your development work

The ways we use Large Language Models (LLMs) are becoming more diverse every day, especially with the rise of agents. Building on LLMs, researchers have created agents that can reason, converse, use tools, and adapt to feedback.

Recently, agent research has moved further into the multi-agent approach, in which multiple agents work together. I am personally fascinated by how these agents reason with each other to achieve their goals.

Slowly but surely, agent research is laying the groundwork for much of what we do. This includes code and application development, where a few researchers have already tried to automate development with agents. One example is AutoGen, developed by Microsoft researchers.

I love the progress in agent research, and I am excited to write about AutoGen because I believe many applications could come out of this work.

So, what is AutoGen, and how does it work? Let’s explore.

AutoGen

As mentioned above, AutoGen is a research framework that gives users a multi-agent approach to building LLM-based applications. It offers two main advantages:

1. Customizable and conversable agents
2. Conversation programming

Let’s look at each advantage a little more closely.

First, customizable means that AutoGen's agents can be tweaked. We can give each agent a specific role, such as executing code, validating output, or providing feedback. Conversable means that an agent can receive messages from another agent or from a human, and then respond or act on them.

Second, conversation programming means that we build an LLM-based application by programming how multiple agents talk to each other. AutoGen streamlines this in two steps. First, we define a set of conversable agents with specific capabilities and roles. Second, we program the interaction behavior between them.
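To make the two ideas concrete, here is a plain-Python toy. The `ToyAgent` class and `run_chat` function are hypothetical and are not AutoGen's API.

- Each agent is customizable through its role and its reply function.
- The conversation is "programmed" by a loop that decides who replies next and when to stop.

In AutoGen, a reply would come from an LLM, from code execution, or from a human. The `TERMINATE` check mirrors the keyword that AutoGen's default assistant prompt uses to signal the end of a task.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class ToyAgent:
    """A toy 'conversable' agent: a name, a role, and a pluggable reply function."""
    name: str
    system_message: str             # the role; a real agent would send this to its LLM
    reply_fn: Callable[[str], str]  # stands in for an LLM call, a tool, or a human

    def generate_reply(self, message: str) -> str:
        return self.reply_fn(message)


def run_chat(initiator: ToyAgent, recipient: ToyAgent, message: str,
             max_turns: int = 6) -> List[Tuple[str, str]]:
    """Two agents take turns replying until one says TERMINATE or max_turns is hit."""
    transcript = [(initiator.name, message)]
    speakers = (recipient, initiator)  # the recipient answers first, then they alternate
    for turn in range(max_turns):
        speaker = speakers[turn % 2]
        message = speaker.generate_reply(message)
        transcript.append((speaker.name, message))
        if "TERMINATE" in message:
            break
    return transcript


coder = ToyAgent("Coder", "You write Python code.", lambda msg: "print('hello')")
reviewer = ToyAgent(
    "Reviewer", "You check the code.",
    lambda msg: "Looks good. TERMINATE" if "print" in msg else "Please send code.",
)
for name, text in run_chat(reviewer, coder, "Write a hello-world script."):
    print(f"{name}: {text}")
```

Swapping a reply function or a role changes an agent's behavior without touching the loop. Changing the loop changes the interaction pattern without touching the agents. That separation is the core of conversation programming.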

The image below gives an overview of AutoGen.

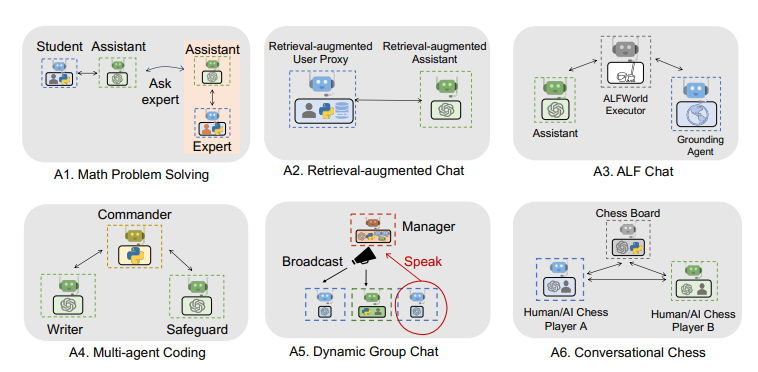

In their paper, Wu et al. (2023) present several AutoGen use cases to showcase what the framework can do.

With applications ranging from math problem solving to conversational chess, AutoGen shows that we can shape the agents and their interactions to achieve our goals.

That’s a basic introduction to AutoGen. Let’s try out the framework to better understand what it can do.

Develop a Simple Solution with AutoGen

In this example, we ask AutoGen to find the latest paper on multimodal LLMs on arXiv and suggest potential applications. The implementation is inspired by AutoGen's code examples.

First, we need to install the AutoGen package. I suggest creating a virtual environment for this tutorial so you don’t mess up your base environment. We can install AutoGen with the following command.

```bash
pip install pyautogen
```

Next, we set up the configuration and the agents that will hold the conversation. In the code below, we specify the models the agents use as their reasoning engine: OpenAI's GPT models.

```python
import autogen

# Models the agents can use; replace 'YOUR API KEY' with your OpenAI key
config_list = [
    {
        'model': 'gpt-4',
        'api_key': 'YOUR API KEY',
    },
    {
        'model': 'gpt-3.5-turbo',
        'api_key': 'YOUR API KEY',
    },
    {
        'model': 'gpt-3.5-turbo-16k',
        'api_key': 'YOUR API KEY',
    },
]
```

The code above lists the GPT models the agents can use. AutoGen tries the entries in order and moves on to the next one if a request fails. Next, we set up the model parameters with the following code.

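```python
# seed: caching seed, so reruns reuse earlier responses; temperature: sampling randomness
llm_config = {"config_list": config_list, "seed": 42, "temperature": 0.5}
```

Besides the model list, any other parameter the model accepts, such as `temperature`, can be passed here. The `seed` value controls caching, so rerunning the same conversation can reuse earlier LLM responses. Newer AutoGen releases name this key `cache_seed`.

Next, we set up the conversable agents that will interact. AutoGen provides two subclasses of the conversable agent: the User Proxy Agent and the Assistant Agent.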

The User Proxy Agent acts as a proxy for the user. It executes code and provides feedback to the other agents. It can ask a human for input, or we can let it reply automatically on the human's behalf.
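Whether the proxy asks a real human or answers on its own is controlled by its `human_input_mode` setting. AutoGen documents three modes: `ALWAYS`, `TERMINATE`, and `NEVER`.

The function below is a simplified, hypothetical restatement of that documented behavior. `should_ask_human` is my own name, not an AutoGen function. It ignores details such as how the real agent resets its auto-reply counter.

```python
VALID_MODES = {"ALWAYS", "TERMINATE", "NEVER"}


def should_ask_human(mode: str, got_termination_msg: bool,
                     auto_replies: int, max_consecutive_auto_reply: int) -> bool:
    """Decide whether the proxy pauses for human input (simplified sketch)."""
    if mode not in VALID_MODES:
        raise ValueError(f"unknown human_input_mode: {mode!r}")
    if mode == "ALWAYS":
        return True   # a human is consulted on every turn
    if mode == "NEVER":
        return False  # the agent always answers on its own
    # TERMINATE: only at a termination message or when the auto-reply budget is used up
    return got_termination_msg or auto_replies >= max_consecutive_auto_reply


print(should_ask_human("TERMINATE", False, 3, 10))  # False: keep auto-replying
print(should_ask_human("TERMINATE", True, 3, 10))   # True: termination message received
```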

In contrast, the Assistant Agent is designed to solve tasks with the given LLM. It acts as the technical expert who proposes the solution, for example by writing code.
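In practice, the assistant usually puts its solution in a markdown code block. The user proxy then extracts that block and executes it. The `last_n_messages` setting in the user proxy's `code_execution_config` controls how many recent messages it searches for code.

The sketch below is a simplified, hypothetical version of that loop. `extract_code_blocks` and `run_python_block` are my own names, not AutoGen's API. The sketch has these limits:

- It handles only Python.
- It saves code to a fixed, made-up file name.
- It has no sandboxing.

AutoGen's own executor does more, such as running shell blocks and optionally isolating execution in Docker.

```python
import re
import subprocess
import sys
import tempfile
from pathlib import Path

# Matches fenced blocks such as ```python\n...\n```; the language tag is optional.
CODE_BLOCK = re.compile(r"```(\w*)\n(.*?)```", re.DOTALL)


def extract_code_blocks(messages, last_n_messages=2):
    """Collect (language, code) pairs from the last `last_n_messages` messages."""
    blocks = []
    for message in messages[-last_n_messages:]:
        blocks.extend(CODE_BLOCK.findall(message))
    return blocks


def run_python_block(code, work_dir):
    """Save one Python block into work_dir, run it, and return (exit_code, output)."""
    folder = Path(work_dir)
    folder.mkdir(parents=True, exist_ok=True)
    script = folder / "snippet.py"  # hypothetical file name
    script.write_text(code)
    result = subprocess.run(
        [sys.executable, script.name],
        cwd=folder, capture_output=True, text=True, timeout=60,
    )
    return result.returncode, result.stdout + result.stderr


history = [
    "Find a paper about multimodal LLMs.",
    "Here is a script:\n```python\nprint(6 * 7)\n```",
]
with tempfile.TemporaryDirectory() as tmp:
    for lang, code in extract_code_blocks(history):
        if lang in ("python", ""):
            print(run_python_block(code, tmp))  # (0, '42\n')
```

The exit code and output are what the proxy would send back to the assistant as feedback. A non-zero exit code lets the assistant see the error and try again.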

Let’s start by initializing our User Proxy Agent. In the following code, we set the agent up as a human admin who manages the result.

```python
user_proxy = autogen.UserProxyAgent(
    # Name of the User Proxy Agent
    name="User_proxy",
    # The role we set for this agent
    system_message="A human admin.",
    # Code execution settings: look for code in the last two messages,
    # then save and run it in the "groupchat" folder
    code_execution_config={"last_n_messages": 2, "work_dir": "groupchat"},
    # "TERMINATE": prompt for human input only when a termination message is received
    # or the number of auto-replies reaches max_consecutive_auto_reply
    human_input_mode="TERMINATE",
)
```

Next, we set up the Assistant Agents. In the following code, we create two different agents that act as the coder and the product manager.

```python
# The coder keeps AutoGen's default assistant system message
coder = autogen.AssistantAgent(
    name="Coder",
    llm_config=llm_config,
)

# The product manager gets a custom role
pm = autogen.AssistantAgent(
    name="Product_manager",
    system_message="Creative in software product ideas.",
    llm_config=llm_config,
)
```

With all the agents ready, we put them together in a group chat so they can interact with each other, using the following code.

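```python
# All three agents share one conversation, capped at 12 rounds
groupchat = autogen.GroupChat(agents=[user_proxy, coder, pm], messages=[], max_round=12)

# The manager selects the next speaker and relays messages to the group
manager = autogen.GroupChatManager(groupchat=groupchat, llm_config=llm_config)
```

Now we ask the agents to interact with each other to solve the intended problem.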

```python
user_proxy.initiate_chat(manager, message="Find a latest paper about Multimodal LLM on arxiv and find its potential applications in software.")
# type exit to terminate the chat
```

You would then see the whole activity of the agents in your terminal or IDE. For example, here are the last few messages of the chat.

The product manager seems to have a hard time finding the paper because of technical restrictions, but it is still able to provide feedback. If you check the working directory, you can see the code used to solve the given problem.

If you want to make sure the result is correct, you can validate the output code.
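It also helps to picture what the group chat manager does during a run like this. On each round, the manager:

1. Picks a speaker.
2. Lets that agent read the shared history.
3. Appends the agent's reply to the history.

This repeats until someone says `TERMINATE` or `max_round` is reached.

The toy below illustrates that loop. It is hypothetical plain Python, not AutoGen's implementation, and differs in two ways:

- AutoGen's manager by default asks the LLM to choose the next speaker. To stay runnable without an API key, this toy rotates speakers round-robin.
- The toy counts the opening message as a round.

```python
from typing import Callable, Dict, List, Tuple

History = List[Tuple[str, str]]
Reply = Callable[[History], str]


def run_group_chat(agents: Dict[str, Reply], first_message: str,
                   max_round: int = 12) -> History:
    """Toy group chat: every agent sees the shared history; speakers rotate
    until someone says TERMINATE or the history holds max_round messages."""
    names = list(agents)
    history = [(names[0], first_message)]  # the first agent opens the chat
    turn = 1
    while len(history) < max_round:
        speaker = names[turn % len(names)]  # round-robin stand-in for LLM selection
        reply = agents[speaker](history)    # each agent reads the full history
        history.append((speaker, reply))
        if "TERMINATE" in reply:
            break
        turn += 1
    return history


agents = {
    "User_proxy": lambda h: "TERMINATE",
    "Coder": lambda h: "Here is a script that queries arXiv.",
    "Product_manager": lambda h: "It could power a paper-summary tool.",
}
for name, text in run_group_chat(agents, "Find a recent multimodal LLM paper."):
    print(f"{name}: {text}")
```

Because every agent reads the same history, the product manager can react to the coder's output without a direct channel between them. The `max_round` cap keeps a conversation that never reaches `TERMINATE` from running forever.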

There are many more example implementations on the AutoGen webpage. I will try to write a further tutorial on advanced AutoGen usage later.

Conclusion

AutoGen is a multi-agent framework from Microsoft researchers that helps users develop solutions to a given problem. What makes AutoGen stand out is that it lets users tweak both the agents and their conversations to achieve a goal.

I hope it helps.

Thank you, everyone, for subscribing to my newsletter. If you have something you want me to write or discuss, please comment or directly message me through my social media!