Fine-Tuning vs. RAG – Which Reigns Supreme?

Comparison between the two techniques

Artificial Intelligence, or AI, has become integral to our daily work. Products such as ChatGPT lead the trend and have helped popularize many similar applications.

Given their popularity, language models now play a key role in many applications, from customer support to content generation. As these models have evolved, two common approaches for adapting them to specific needs have emerged: fine-tuning and Retrieval-Augmented Generation (RAG).

Both techniques have become standard ways to adapt a model to a particular use case. This article explores both, compares their technical foundations, and discusses when to use which.

If you are curious about them, let’s explore them together! At the end, don’t miss the learning materials for fine-tuning and RAG, plus an infographic to support your learning.

Background Concepts

To understand how AI models are customized, we first need to understand how fine-tuning and RAG differ.

In this section, we’ll explore the concept behind each approach to build that understanding.

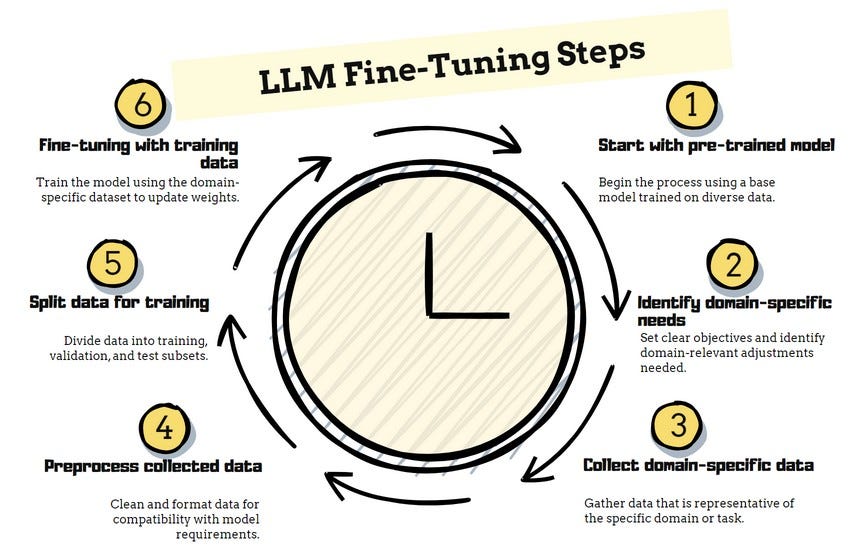

1. Fine-Tuning Technique

Fine-tuning is the process of taking a pre-trained language model and further training it on a domain-specific dataset.
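
To make the idea concrete, here is a minimal toy sketch in plain Python. It is not how a real language model is fine-tuned: the "model" is a one-variable linear function, and the starting weights, the dataset, and the `fine_tune` helper are all assumptions invented for illustration. What it does show is the core pattern: start from existing weights rather than from scratch, then continue gradient-descent training on a small domain-specific dataset.

```python
def predict(weights, x):
    """Toy 'model': a line y = w * x + b."""
    w, b = weights
    return w * x + b


def mse(weights, data):
    """Mean squared error of the model on a list of (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, data, lr=0.05, epochs=500):
    """Continue training from the given weights using gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


# Hypothetical "pre-trained" weights, standing in for general-purpose training.
pretrained = (2.0, 0.0)

# Hypothetical domain-specific dataset that follows y = 2.5 * x + 1.
domain_data = [(0.0, 1.0), (1.0, 3.5), (2.0, 6.0), (3.0, 8.5)]

loss_before = mse(pretrained, domain_data)
tuned = fine_tune(pretrained, domain_data)
loss_after = mse(tuned, domain_data)

print(f"loss before fine-tuning: {loss_before:.4f}")
print(f"loss after fine-tuning:  {loss_after:.4f}")
```

In practice the same loop is run by a deep learning framework over millions or billions of parameters, but the principle is the same: the pre-trained weights are the starting point, and the domain data nudges them toward the target task.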