LLM Transfer Learning with a Few Lines of Code

Learn transfer learning with this simple Python code

In our previous newsletter, we learned what transfer learning is and the advantages of implementing it. If you missed it, you can always visit the newsletter here.

In the next section, we will learn how to use Python code for LLM transfer learning. As Large Language Models have already taken over the world, I think it’s important to learn more about them.

In this code example, we will use the Hugging Face Transformers Python package for transfer learning, with PyTorch as the backend. If you haven’t installed the packages yet, you can use the following commands.

```bash
pip install transformers
pip install torch
```

The Transformers library provides many pre-trained models, which you can browse on the Hugging Face website. For this example, we will use BERT, which is currently one of the staple models for many LLM tasks.
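Because every checkpoint in the Transformers library is loaded through the same interface, you are not tied to BERT. As an optional convenience, here is a small helper (the name `load_classifier` is our own, hypothetical) built on the library’s `AutoTokenizer` and `AutoModelForSequenceClassification` classes, which pick the matching architecture from the checkpoint name or a local directory. Under that assumption, swapping models becomes a one-line change.

```python
from transformers import AutoModelForSequenceClassification, AutoTokenizer


def load_classifier(model_name: str, num_labels: int = 2):
    """Load a tokenizer and a sequence-classification model.

    model_name can be a checkpoint name (e.g. "bert-base-uncased") or a local
    directory created with save_pretrained(). This helper is a hypothetical
    convenience wrapper, not part of the Transformers API.
    """
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # Loads the pre-trained encoder and attaches a classification head
    # with num_labels outputs (newly initialized if the checkpoint lacks one)
    model = AutoModelForSequenceClassification.from_pretrained(
        model_name, num_labels=num_labels
    )
    return tokenizer, model
```

For example, `load_classifier("distilbert-base-uncased")` would load DistilBERT instead of BERT; the weights are downloaded on the first call.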

```python
import torch
# transformers' own AdamW is deprecated; PyTorch's implementation is the recommended replacement
from torch.optim import AdamW
from torch.utils.data import DataLoader, TensorDataset
from transformers import BertTokenizer, BertForSequenceClassification

# Load the pre-trained BERT model and tokenizer
model_name = "bert-base-uncased"
tokenizer = BertTokenizer.from_pretrained(model_name)

# Set num_labels to the number of classes in your task (2 for positive/negative)
model = BertForSequenceClassification.from_pretrained(model_name, num_labels=2)
```

Once the model is loaded, let’s prepare our dataset. We will use example data here, but you should replace it with your own dataset later. In our case, we assume the task is sentiment analysis (classifying text as negative or positive).
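When you swap in your own dataset, the fine-tuning code only needs two parallel Python lists, `texts` and `labels`, so any source that produces them will work. A minimal sketch, assuming your data is a CSV file with hypothetical `text` and `label` columns, where the labels are already integers (0 for negative, 1 for positive, matching the example below):

```python
import csv


def load_text_classification_csv(path, text_column="text", label_column="label"):
    """Read a CSV file into parallel lists of texts and integer labels.

    The column names are assumptions; change them to match your file.
    """
    texts, labels = [], []
    with open(path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            texts.append(row[text_column])
            # Labels must be integers from 0 to num_labels - 1
            labels.append(int(row[label_column]))
    return texts, labels
```

Calling `texts, labels = load_text_classification_csv("reviews.csv")` (the file name is hypothetical) in place of the two example lists leaves the tokenization and DataLoader code unchanged. If your labels are strings such as "positive", map them to integers first.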

```python
# Tokenize and preprocess your dataset (replace this with your actual dataset)
texts = ["This is a positive example.", "This is a negative example."]
labels = [1, 0]  # 1 for positive, 0 for negative

# Pad/truncate the texts to a common length and return PyTorch tensors
encodings = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
input_ids, attention_mask = encodings["input_ids"], encodings["attention_mask"]
label_tensor = torch.tensor(labels)

# Create a DataLoader for your dataset; shuffling changes the batch order every epoch
dataset = TensorDataset(input_ids, attention_mask, label_tensor)
dataloader = DataLoader(dataset, batch_size=8, shuffle=True)
```

With all the data ready, let’s start the transfer learning.

# Set up the optimizer. Try to experiments with the parameter

optimizer = AdamW(model.parameters(), lr=2e-5)

# Fine-tuning loop

num_epochs = 3

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

for epoch in range(num_epochs):

model.train()

for batch in dataloader:

input_ids, attention_mask, labels = [x.to(device) for x in batch]

outputs = model(input_ids, attention_mask=attention_mask, labels=labels)

loss = outputs.loss

loss.backward()

optimizer.step()

optimizer.zero_grad()

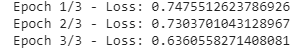

print(f"Epoch {epoch + 1}/{num_epochs} - Loss: {loss.item()}")The result can be seen in the image above. If you are satisfied with the result, you can save the model for future usage.
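Before saving, keep in mind that the printed loss only describes the batches the model was trained on. A minimal sketch for measuring accuracy, assuming you build a second DataLoader (hypothetically named `val_dataloader`) the same way as `dataloader`, but from labeled examples that were not used for training:

```python
import torch


def evaluate_accuracy(model, dataloader, device):
    """Return the fraction of correctly classified examples.

    Expects batches of (input_ids, attention_mask, labels), as produced by
    the TensorDataset in this article.
    """
    model.eval()  # disable dropout during evaluation
    correct, total = 0, 0
    with torch.no_grad():  # gradients are not needed just to measure performance
        for batch in dataloader:
            input_ids, attention_mask, labels = [x.to(device) for x in batch]
            logits = model(input_ids, attention_mask=attention_mask).logits
            predictions = logits.argmax(dim=-1)  # highest-scoring class per example
            correct += (predictions == labels).sum().item()
            total += labels.size(0)
    return correct / total if total else 0.0
```

For example, `print(evaluate_accuracy(model, val_dataloader, device))`. The function leaves the model in evaluation mode; the `model.train()` call at the start of each epoch switches it back if you continue training.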

```python
# Save the fine-tuned model and tokenizer to a local directory
output_dir = "./fine_tuned_bert"
model.save_pretrained(output_dir)
tokenizer.save_pretrained(output_dir)
```

If you want to use the model in another environment, use the following code.

```python
# Load the fine-tuned model and tokenizer from the saved directory
from transformers import BertTokenizer, BertForSequenceClassification

model_name = "./fine_tuned_bert"
tokenizer = BertTokenizer.from_pretrained(model_name)
model = BertForSequenceClassification.from_pretrained(model_name)
```

That is how you can do your own transfer learning. I hope it helps!
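After loading, the model can classify new text. A minimal sketch of a hypothetical `predict` helper that tokenizes the inputs the same way as during training and picks the highest-scoring class for each text:

```python
import torch


def predict(texts, tokenizer, model, id2label=None):
    """Return one predicted label per input text.

    Returns class ids, or names if an id2label mapping is given.
    """
    model.eval()  # disable dropout for inference
    encodings = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    # Keep the inputs on the same device as the model's weights
    device = next(model.parameters()).device
    encodings = {k: v.to(device) for k, v in encodings.items()}
    with torch.no_grad():
        logits = model(**encodings).logits
    predicted_ids = logits.argmax(dim=-1).tolist()
    if id2label is None:
        return predicted_ids
    return [id2label[i] for i in predicted_ids]
```

For example, `predict(["I really enjoyed this!"], tokenizer, model, id2label={0: "negative", 1: "positive"})` returns a list with one label per text; the mapping follows the 1 for positive, 0 for negative convention used in the dataset above.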
