NBD Lite #5: Implementing Precision-Recall Curve Analysis for Evaluating Imbalanced Classifiers

A simple yet powerful analysis for many real-world cases

An imbalanced classifier is a model that handles classification cases where the output labels are not equally distributed.

Imbalanced cases vary in degree depending on how lopsided the class distribution is, and classifiers tend to struggle more as the imbalance becomes more severe.

Why does the classifier often have a hard time with imbalanced cases?

We need to remember that machine learning algorithms learn patterns from data. In imbalanced cases, the classifier has far less learning material for the minority class, which leads to a bias toward the majority class.

This bias also leads to misleadingly high accuracy.

For example, let’s look at how we calculate accuracy from a sample confusion matrix for an imbalanced classifier’s predictions: 900 true negatives, 20 true positives, 50 false positives, and 30 false negatives.

If we calculate using that confusion matrix, the Accuracy would be:

(900 + 20)/(900 + 20 + 50 + 30) = 0.92
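The same arithmetic can be written as a short Python sketch. The counts are the ones from the fraud example in this post (900 true negatives, 20 true positives, 50 false positives, 30 false negatives); the variable names are just for illustration.

```python
# Confusion matrix counts from the fraud example in this post
tn, fp, fn, tp = 900, 50, 30, 20

correct = tn + tp            # predictions that match the actual label
total = tn + fp + fn + tp    # all predictions

accuracy = correct / total
print(f"Accuracy: {accuracy:.2f}")
```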

An accuracy of 92% looks close to perfect, as the model “supposedly” predicts correctly almost every time.

However, further inspection would break the illusion of a great model.

If we take the example data above as fraud cases, the actual data has 950 cases where no fraud happened and 50 cases that were fraudulent.

The model above might have 92% accuracy, but it misses 30 of the 50 actual fraud cases and wrongly flags 50 legitimate cases as fraud.

In a real-world setting, that’s very bad. You don’t want to miss so many fraud cases, or risk the company's reputation by wrongly accusing someone of fraud.

But that’s what happens to a classifier that only focuses on accuracy. Just keep predicting the majority class, and the accuracy stays high.
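To make this concrete, here is a small sketch of a hypothetical "model" that always predicts the majority class (not fraud) on the same 950/50 split. It is only an illustration of the point, not a real classifier.

```python
# Class totals from the fraud example: 950 legitimate, 50 fraudulent
n_negative, n_positive = 950, 50

# Always predicting "not fraud" gives these confusion matrix counts
tn, fp = n_negative, 0   # every legitimate case is trivially correct
fn, tp = n_positive, 0   # every fraud case is missed

baseline_accuracy = (tn + tp) / (tn + fp + fn + tp)
baseline_recall = tp / (tp + fn)

print(f"Accuracy: {baseline_accuracy:.2f}")       # share of correct predictions
print(f"Recall on fraud: {baseline_recall:.2f}")  # share of fraud cases caught
```

This baseline reaches 95% accuracy while catching none of the fraud cases, which is why accuracy alone is not enough here.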

This is where we can use other metrics for imbalanced cases: Precision and Recall.

Semantically, we can say:

Precision: “Of all the positive predictions made, how many are actually positive?”

Recall: “Of all the actual positives, how many were correctly predicted?”

But I like to think more practically. Precision is TP / (TP + FP), so it drops as False Positives (FP) grow, while Recall is TP / (TP + FN), so it drops as False Negatives (FN) grow.
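As a quick check, here is a minimal sketch that applies the standard precision and recall formulas to the fraud example's counts. The variable names are illustrative.

```python
# Confusion matrix counts from the fraud example in this post
tn, fp, fn, tp = 900, 50, 30, 20

# Precision: of all cases flagged as fraud, how many were really fraud?
precision = tp / (tp + fp)

# Recall: of all real fraud cases, how many did the model catch?
recall = tp / (tp + fn)

print(f"Precision: {precision:.3f}")
print(f"Recall: {recall:.3f}")
```

Precision comes out at roughly 0.29 and recall at 0.40, a very different picture from the 92% accuracy.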

If you want to minimize FP, you should focus on Precision. In contrast, you should focus on Recall if you want to minimize FN.

Do FP cases do more harm? Or is FN way more costly? It comes back to your use case.

Well, most people would opt to have both high Precision and Recall. If you want a single number, the F1 score is the harmonic mean of both metrics, but I would like to go further than the F1.
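For reference, the F1 score for the fraud example can be sketched as below, using the standard harmonic mean formula and the precision and recall derived from the example's counts.

```python
# Precision and recall from the fraud example (TP=20, FP=50, FN=30)
precision = 20 / (20 + 50)
recall = 20 / (20 + 30)

# F1 is the harmonic mean of precision and recall
f1 = 2 * precision * recall / (precision + recall)
print(f"F1: {f1:.3f}")
```

The F1 here is about 0.33, but it is a single number computed at one decision threshold.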

The precision-recall curve shows the tradeoff between precision and recall across different decision thresholds.
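The threshold tradeoff can be sketched in plain Python. The labels and scores below are made up purely for illustration, and `precision_recall_at` is a hypothetical helper, not a library function.

```python
# Hypothetical labels (1 = positive) and predicted probabilities, made up for illustration
y_true = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
y_score = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.50, 0.60, 0.70, 0.90]

def precision_recall_at(threshold):
    """Return (precision, recall) when scores >= threshold are predicted positive."""
    y_pred = [1 if score >= threshold else 0 for score in y_score]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Assumed convention: precision is 1.0 when nothing is predicted positive
    precision = tp / (tp + fp) if (tp + fp) > 0 else 1.0
    recall = tp / (tp + fn)
    return precision, recall

for threshold in (0.3, 0.55, 0.8):
    precision, recall = precision_recall_at(threshold)
    print(f"threshold={threshold:.2f}  precision={precision:.2f}  recall={recall:.2f}")
```

On this toy data, raising the threshold from 0.3 to 0.8 lifts precision from about 0.43 to 1.00 while recall falls from 1.00 to about 0.33. The precision-recall curve plots exactly these pairs across all thresholds.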

Let’s see an example of the Precision-Recall curve:

A high area under the curve means that both the classifier's recall and precision are high with respect to the positive class.

We can say that a high AUC-PR score means that the classifier returns accurate results (high precision) and finds most of the actual positive cases (high recall).

AUC-PR becomes beneficial with imbalanced data because the curve emphasizes the positive class, which is often the class of primary interest in an imbalanced case.

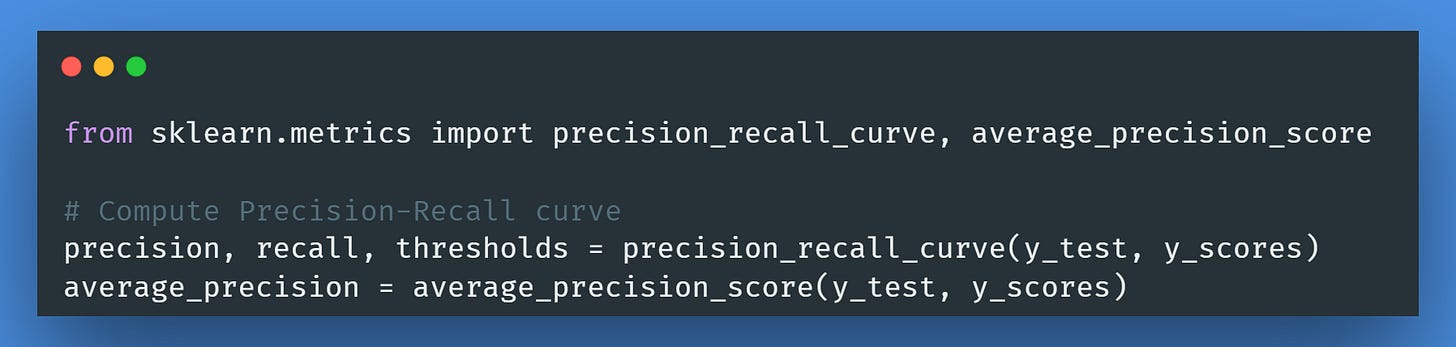

In Python, you can implement the Precision-Recall curve and AUC-PR with scikit-learn.
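The snippet below is a minimal sketch rather than the exact code from the original post. The synthetic dataset from `make_classification` (roughly 5% positives), the logistic regression model, and all variable names are assumptions chosen for illustration; swap in your own data and classifier.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import auc, average_precision_score, precision_recall_curve
from sklearn.model_selection import train_test_split

# Synthetic imbalanced data (about 5% positives), a stand-in for a real dataset
X, y = make_classification(
    n_samples=5000, n_features=20, weights=[0.95, 0.05], random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)

# Any classifier that outputs scores or probabilities would work here
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Probability of the positive class, used as the ranking score
y_score = model.predict_proba(X_test)[:, 1]

# Precision and recall at every threshold, then the area under that curve
precision, recall, thresholds = precision_recall_curve(y_test, y_score)
auc_pr = auc(recall, precision)

# Average precision is a closely related summary of the same curve
avg_precision = average_precision_score(y_test, y_score)

print(f"AUC-PR: {auc_pr:.3f}")
print(f"Average precision: {avg_precision:.3f}")
```

To draw the curve itself, one option is `PrecisionRecallDisplay.from_predictions(y_test, y_score)` from `sklearn.metrics`, which requires matplotlib.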

So, to better understand your binary classifier's performance in imbalanced cases, try using a precision-recall curve.

Just remember that a higher AUC-PR value indicates a better model. The maximum value is 1 (perfect precision and recall).

That’s all for today! I hope this post helps you better understand how to handle imbalanced cases.

Are there any metrics you like to use in imbalanced cases? Let’s discuss it together!
