Python Packages for Feature Engineering - NBD Lite #34

Know these packages to improve your data workflow

Feature engineering is the process of creating new features from the existing data.

Whether we simply add two columns or combine more than a thousand features, the process counts as feature engineering.

Feature engineering is essential to the data workflow because it can substantially improve our project’s performance.

For example, an empirical analysis by Heaton (2020) showed that feature engineering improves the performance of various machine learning models.

To help with the feature engineering process, this article goes through my top Python packages for feature engineering.

Let’s get into it!

1. Featuretools

Featuretools is an open-source Python package, developed by Alteryx, that automates the feature engineering process.

It is designed to create deep features from the data we already have, especially temporal and relational data.

Deep Feature Synthesis (DFS) is at the heart of Featuretools, as it lets us generate new features from our data quickly.
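To build intuition for what "deep" means here, below is a plain-Python sketch of stacking aggregations across relationships: transactions are first aggregated per session, and those session-level values are then aggregated per customer. The toy tables and the `aggregate` helper are hypothetical, and the column names only imitate Featuretools' naming style; this is not how Featuretools implements DFS.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy tables: each child row points to its parent via a key
sessions = [
    {"session_id": 1, "customer_id": "a"},
    {"session_id": 2, "customer_id": "a"},
    {"session_id": 3, "customer_id": "b"},
]
transactions = [
    {"transaction_id": 10, "session_id": 1, "amount": 20.0},
    {"transaction_id": 11, "session_id": 1, "amount": 40.0},
    {"transaction_id": 12, "session_id": 2, "amount": 10.0},
    {"transaction_id": 13, "session_id": 3, "amount": 5.0},
]


def aggregate(child_rows, key, value, func):
    """Hypothetical helper: group child rows by a key and reduce each group."""
    groups = defaultdict(list)
    for row in child_rows:
        groups[row[key]].append(row[value])
    return {k: func(v) for k, v in groups.items()}


# Depth 1: session-level features computed from transactions
session_count = aggregate(transactions, "session_id", "amount", len)
session_mean = aggregate(transactions, "session_id", "amount", mean)

# Attach the depth-1 features to the session rows
for s in sessions:
    s["COUNT(transactions)"] = session_count.get(s["session_id"], 0)
    s["MEAN(transactions.amount)"] = session_mean.get(s["session_id"])

# Depth 2: customer-level feature stacked on top of a session-level feature
customer_mean_count = aggregate(sessions, "customer_id", "COUNT(transactions)", mean)

print(customer_mean_count)  # {'a': 1.5, 'b': 1}
```

The second aggregation reuses a feature that was itself an aggregation, which is the kind of stacking that lets DFS produce many features from a few tables.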

How do we perform it? Let’s use an example dataset from Featuretools. First, we need to install the package.

```bash
pip install featuretools
```

Next, I will load the toy dataset that ships with the package to perform Deep Feature Synthesis.

import featuretools as ft#Loading the mock data

data = ft.demo.load_mock_customer()cust_df = data["customers"]

session_df = data["sessions"]

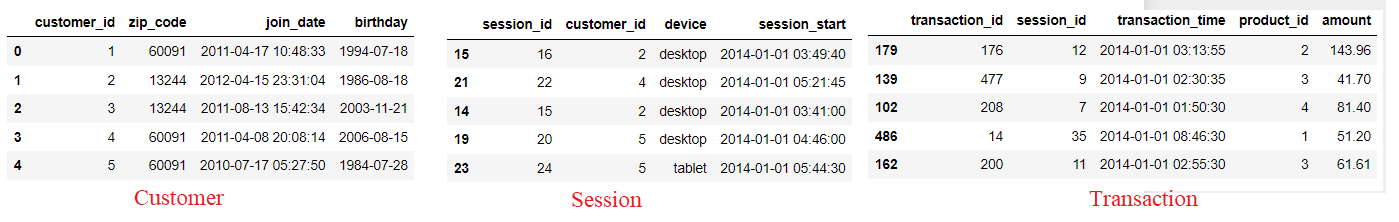

transaction_df = data["transactions"]In the above dataset, we have three different connected datasets:

The customer table (one row per customer)

The session table (one row per customer session)

The transaction table (the transactions made in each session)

The tables are connected to one another through their respective keys. To use Featuretools DFS, we need to specify each table’s name and primary key in a dictionary (if a table has a datetime column, we also add it as the time index).

```python
# Table name -> (dataframe, primary key[, time index])
dataframes = {
    "customers": (cust_df, "customer_id"),
    "sessions": (session_df, "session_id", "session_start"),
    "transactions": (transaction_df, "transaction_id", "transaction_time"),
}
```

Then, we need to specify the relationships between the tables as well. This is important because DFS relies on these relationships to create the features.

```python
# Each tuple: (parent table, parent key, child table, child key)
relationships = [
    ("sessions", "session_id", "transactions", "session_id"),
    ("customers", "customer_id", "sessions", "customer_id"),
]
```

Finally, we can start the DFS process by running the following code. The important part is the target_dataframe_name parameter, which sets the level at which the resulting features are created. For example, this code performs feature engineering at the customer level.

feature_matrix_customers, features_defs = ft.dfs(

dataframes=dataframes,

relationships=relationships,

target_dataframe_name="customers",

)

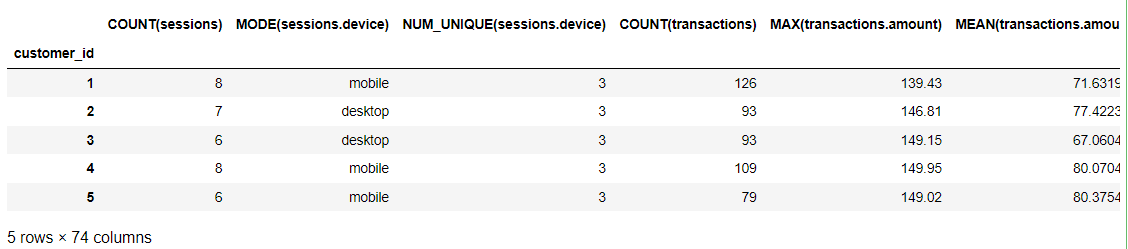

feature_matrix_customersAs we can see from the above picture, we have new features of the customer data, including various new features from the session and transaction table, such as the count and mean of certain features. With few lines, we have produced a lot of features.

Of course, not all of these features will be helpful for machine learning modeling, but filtering them out is the job of feature selection. In our case, feature engineering is concerned only with creating the features.
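As an illustration of that separate step, here is a plain-Python sketch of one of the simplest selection filters: dropping generated columns that carry no information because they hold fewer than two distinct non-null values. The function name and toy rows are hypothetical, and this is not Featuretools’ own selection routine.

```python
def drop_single_value_features(rows):
    """Hypothetical filter: keep columns with at least two distinct non-null values."""
    if not rows:
        return rows
    columns = rows[0].keys()
    keep = [
        col
        for col in columns
        if len({row[col] for row in rows if row[col] is not None}) >= 2
    ]
    return [{col: row[col] for col in keep} for row in rows]


# Toy feature rows: one informative column, one constant, one entirely missing
feature_rows = [
    {"customer_id": "a", "COUNT(sessions)": 2, "all_same": 1, "all_missing": None},
    {"customer_id": "b", "COUNT(sessions)": 1, "all_same": 1, "all_missing": None},
]
filtered = drop_single_value_features(feature_rows)
print(filtered)
# [{'customer_id': 'a', 'COUNT(sessions)': 2}, {'customer_id': 'b', 'COUNT(sessions)': 1}]
```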

If you need an explanation of a feature, you can use the following function.

```python
# Describe one of the generated feature definitions in plain English
feature = features_defs[10]
ft.describe_feature(feature)
```

2. Feature-Engine

Feature-Engine is an open-source Python package for feature engineering and feature selection. Its transformers follow the scikit-learn API, with methods such as fit and transform.

How valuable is Feature-Engine? It is especially beneficial when you already have a machine learning pipeline in mind, particularly if you use scikit-learn-based APIs.

The Feature-Engine transformers are designed to work within scikit-learn pipelines and behave like scikit-learn’s own transformers.
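The contract these transformers share with scikit-learn is small: fit() learns whatever it needs from the training data and returns the transformer itself, and transform() applies what was learned to any data. The class below is a hypothetical, dependency-free sketch of that contract for a feature-creation step; it is not a Feature-Engine class, although its `variables` argument mirrors the parameter name used in the examples that follow.

```python
class RatioToMeanFeatures:
    """Hypothetical transformer illustrating the fit/transform contract."""

    def __init__(self, variables):
        self.variables = variables

    def fit(self, rows):
        # Learn each variable's mean from the training rows only
        self.means_ = {
            var: sum(r[var] for r in rows) / len(rows) for var in self.variables
        }
        return self  # returning self enables fit(...).transform(...) chaining

    def transform(self, rows):
        # Add one new column per variable, reusing the means learned in fit()
        out = []
        for r in rows:
            new_row = dict(r)
            for var in self.variables:
                new_row[f"{var}_ratio_to_mean"] = r[var] / self.means_[var]
            out.append(new_row)
        return out

    def fit_transform(self, rows):
        return self.fit(rows).transform(rows)


train_rows = [{"mpg": 20.0}, {"mpg": 30.0}]
new_rows = [{"mpg": 50.0}]

t = RatioToMeanFeatures(variables=["mpg"]).fit(train_rows)
print(t.transform(new_rows))  # [{'mpg': 50.0, 'mpg_ratio_to_mean': 2.0}]
```

Because the mean is learned in fit(), the new row is divided by the training mean (25.0); that separation is what lets such transformers sit safely inside a pipeline.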

Feature-Engine offers many APIs to try, but for this article’s purpose, we will focus only on its feature creation functions. There are three APIs we can try:

MathFeatures: Combining features with mathematical functions

RelativeFeatures: Combining features with reference features

CyclicalFeatures: Creating features using sine and cosine (suitable for cyclical features)
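CyclicalFeatures is not demonstrated below, so here is a dependency-free sketch of the standard sine/cosine encoding the list refers to. A value is mapped to an angle using its period (24 for hours of the day, an assumption chosen for this example), so values at opposite ends of the cycle end up close together. The `cyclical_features` helper is hypothetical, not Feature-Engine’s implementation.

```python
import math


def cyclical_features(value, period):
    """Hypothetical helper: map a periodic value onto the unit circle as (sin, cos)."""
    angle = 2 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


# 23:00 and 01:00 are two hours apart, but 22 apart as raw numbers
for hour in (1, 23):
    s, c = cyclical_features(hour, 24)
    print(hour, round(s, 3), round(c, 3))
# 1 0.259 0.966
# 23 -0.259 0.966
```

In the encoded space, hours 1 and 23 sit next to each other on the circle, while hour 12 lands on the opposite side, which a raw hour column cannot express.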

Let’s try some of these transformers to test the feature engineering process. For starters, I will use the example MPG dataset from Seaborn.

```python
import seaborn as sns

# Load the example MPG dataset
df = sns.load_dataset('mpg')
```

First, I want to try the MathFeatures transformer, which combines features with mathematical functions. To do this, I set up the transformer with both the columns and the transformations we want to apply.

```python
from feature_engine.creation import MathFeatures

# Combine mpg and cylinders row-wise with each listed function
transformer = MathFeatures(
    variables=["mpg", "cylinders"],
    func=["sum", "min", "max", "std"],
)
```

After the setup, we can transform our original data using the transformer.

```python
df_t = transformer.fit_transform(df)
df_t
```

As we can see above, there are new columns from our feature engineering process. The column names make it easy to understand what happened in the process. Note that we can always pass our own functions to the transformer to do custom calculations.
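Conceptually, each MathFeatures output column is a row-wise aggregation across the chosen variables. The sketch below reproduces that idea in plain Python on two hypothetical rows; the `func_var1_var2` column naming is chosen here to resemble the output and should be treated as an assumption, and `stdev` computes the sample standard deviation.

```python
from statistics import stdev

# Hypothetical rows containing the two variables combined above
rows = [
    {"mpg": 18.0, "cylinders": 8},
    {"mpg": 26.0, "cylinders": 4},
]

# Each function is applied across the selected variables within a single row
funcs = {"sum": sum, "min": min, "max": max, "std": stdev}
variables = ["mpg", "cylinders"]

for row in rows:
    values = [row[v] for v in variables]
    for name, func in funcs.items():
        row[f"{name}_{'_'.join(variables)}"] = func(values)

print(rows[0]["sum_mpg_cylinders"], rows[0]["max_mpg_cylinders"])  # 26.0 18.0
```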

We can also try the RelativeFeatures transformer, which uses reference variables to create new features.

```python
from feature_engine.creation import RelativeFeatures

# Combine each variable with the reference variable (mpg) using each operation
transformer = RelativeFeatures(
    variables=["mpg", "weight"],
    reference=["mpg"],
    func=["sub", "div", "mod"],
)

df_r = transformer.fit_transform(df)
df_r
```

As we can see from the above result, the newly created columns are all based on the reference feature (‘mpg’); for example, weight minus mpg. This way, we can quickly develop features relative to the features we choose.
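The logic behind RelativeFeatures can be read as a nested loop: every variable is combined with every reference variable through every chosen operation. The plain-Python sketch below shows that loop on one hypothetical row; the `var_func_ref` naming is an assumption made for readability. Because the example above lists mpg both as a variable and as the reference, it also yields trivial columns such as mpg minus mpg, which are worth filtering out afterwards.

```python
import operator

# Binary operations keyed by the same short names used in the example above
ops = {"sub": operator.sub, "div": operator.truediv, "mod": operator.mod}

row = {"mpg": 18.0, "weight": 3504.0}  # hypothetical values
variables = ["mpg", "weight"]
reference = ["mpg"]

new_features = {}
for var in variables:
    for ref in reference:
        for name, op in ops.items():
            # e.g. "weight_sub_mpg" holds weight - mpg
            new_features[f"{var}_{name}_{ref}"] = op(row[var], row[ref])

print(new_features["weight_sub_mpg"])  # 3486.0
print(new_features["mpg_sub_mpg"])  # 0.0
```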

3. Tsfresh

Tsfresh is an open-source Python package for feature engineering on time-series and sequential data. It allows us to create thousands of new features with a few lines of code. Moreover, the package is compatible with scikit-learn, which enables us to incorporate it into a pipeline.

Feature engineering with Tsfresh is different because the extracted features can’t be used directly to train a machine learning model.

The features describe each time series in the dataset, and additional steps are needed to include them in model training.
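The point is that each output row summarizes a whole series rather than a single time step. The plain-Python sketch below shows the idea on hypothetical long-format records: values are grouped by an id, ordered by a time column, and reduced to a few summary features, including the absolute sum of changes (one of the calculators tsfresh offers). Tsfresh’s real feature set is far larger, and this is not its implementation.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical long-format data: (id, time, value), similar to Name/Date/Close
records = [
    ("AAA", "2017-01-04", 11.0),
    ("AAA", "2017-01-03", 10.0),
    ("AAA", "2017-01-05", 13.0),
    ("BBB", "2017-01-03", 50.0),
    ("BBB", "2017-01-04", 49.0),
]

# Group values per id, ordered by the (ISO-formatted) time column
series = defaultdict(list)
for series_id, time, value in sorted(records, key=lambda r: (r[0], r[1])):
    series[series_id].append(value)


def describe(values):
    """A handful of summary features for one whole series."""
    return {
        "length": len(values),
        "mean": mean(values),
        "std": stdev(values) if len(values) > 1 else 0.0,
        "absolute_sum_of_changes": sum(abs(b - a) for a, b in zip(values, values[1:])),
    }


# One feature row per id, no longer one row per time step
features = {series_id: describe(values) for series_id, values in series.items()}
print(features["AAA"]["absolute_sum_of_changes"])  # 3.0
```

One example of an additional step before training is pairing each id’s feature row with a target label defined at the same per-id level.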

Let’s try the package with an example dataset. I will use the DJIA 30 stock data from Kaggle (License: CC0: Public Domain) for this sample.

Specifically, I will use only the stock data from 2017. Let’s read the dataset.

```python
import pandas as pd

df = pd.read_csv('all_stocks.csv')

# Clean the data by dropping rows with NaN values
df = df.dropna().reset_index(drop=True)
df
```

The stock data contains the Date column as the time index and the Name column as the stock identifier. The other columns contain the values we would like to describe using Tsfresh.

Let’s try out the package using the following code.

```python
from tsfresh import extract_features

# One row of features per stock (Name), with each series ordered by Date
extracted_features = extract_features(df, column_id="Name", column_sort="Date")
extracted_features
```

```python
extracted_features.columns
```

As we can see from the above result, the extracted features contain around 3945 new features. These features all describe the original value columns and are ready to be used. If you want to know what each feature means, you can read the descriptions in the tsfresh documentation.
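To see where the thousands of columns come from, it helps to count them per original column. Tsfresh names each feature as the original column name, a double underscore, and the calculator name (plus any parameters), so a sketch like the one below can group names by prefix. The helper name and the sample names are hypothetical; on the real result you would pass `extracted_features.columns`. Assuming the usual Open, High, Low, Close, and Volume value columns each receive the same calculators, roughly 3945 / 5 = 789 features come from each column.

```python
from collections import Counter


def features_per_column(feature_names):
    """Count extracted features per original column, assuming
    tsfresh-style names of the form '<column>__<feature>'."""
    return Counter(name.split("__", 1)[0] for name in feature_names)


# Hypothetical subset of extracted column names
names = ["Close__mean", "Close__maximum", "Open__mean", "Volume__length"]
print(features_per_column(names))  # Counter({'Close': 2, 'Open': 1, 'Volume': 1})
```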

We can also use tsfresh’s feature selection functions to keep only the relevant features. Feature selection is described on the following page.

That’s all for my top Python packages for feature engineering.

Is there anything else you would love to discuss? Let’s talk about it together!
