Utilizing Gorilla LLM to Boost Prompt Results for Greater API Access

Improve the LLM potential to provide APIs in your code

In recent years, Generative AI is become more prominent than ever. Especially with the introduction of ChatGPT, Conversational AI is now used in many activities, including programming.

The introduction of the Large Language Model (LLM) has changed how people perform programming work. In the past, we would try to generate, search, and debug our code by ourselves or with the help of a search engine.

However, LLM has changed the programming game. The models now help us fill in the system for the activity above. We can get a whole code set or full debugging with a single prompt within seconds.

I am not sure how many percentages of people are using LLM for coding purposes, but I am sure the number would steadily. Even Stackoverflow has announced its own generative AI.

The problem is that the LLM model isn’t always correct in providing the result. Recent research by Stanford has shown that for the GPT-4 model, the percentage of code that could be executable dropped from 52.0% in March 2023 to 10.0% in June 2023. It’s even less for GPT-3.5 (from 22.0% to 2%).

In a way to have a better performance in code generation, a Microsoft Research team developed a LLaMA-based model called Gorilla. It’s developed specifically to provide users with a better result of API calls as GPT-4 suffers in performance for the API call aspect.

Let’s explore it further.

Gorilla LLM

As I mentioned above, Gorilla LLM is developed specifically to mitigate the issues of other LLMs during API calls in their result. The inspiration is come from how other LLM models often produce a wrong code during the API generation prompt.

In the paper, Gorilla showed better API call results; as shown in the image above, GPT-4 and Claude suffer from Hallucinations and wrong facts.

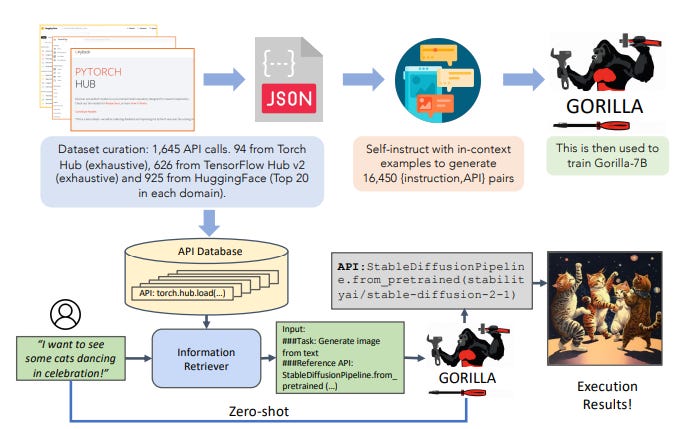

Using the LLaMA-7B-based model, the model is trained and evaluated on the dataset that the team constructed called APIBench, which contains ModelHub API from TorchHub, TensorHub and HuggingFace. Overall, Gorilla is developed similarly to the diagram below.

Code Implementation

We will use the Gorilla example tutorial to try out the LLM. In this sample, we would use the hosted model by UC Berkeley Lab. First, let’s set up all the code and environment for the model to work.

import openai

import urllib.parse

openai.api_key = "EMPTY"

openai.api_base = "http://zanino.millennium.berkeley.edu:8000/v1"

# Query Gorilla server

def get_gorilla_response(prompt="I would like to get a code to transform text to speech.", model="gorilla-7b-hf-v1"):

try:

completion = openai.ChatCompletion.create(

model=model,

messages=[{"role": "user", "content": prompt}]

)

return completion.choices[0].message.content

except Exception as e:

raise_issue(e, model, prompt)With all the preparation ready, let’s try out the model. In the model below, we would use Gorilla Version 1 with APIs choices of 925 Hugging Face APIs Zero-Shot (Gorilla-7b-hf-v1).

prompt = "I would like to translate 'Nice to meet you.' from English to Bahasa Indonesia."

print(get_gorilla_response(prompt, model="gorilla-7b-hf-v1"))Gorilla output is the code that uses HuggingFace API to execute our direction. In this case, we want to translate from English to Bahasa Indonesia.

Let’s try out another example. This time we would use the API call from TorchHub (gorilla-7b-th-v0).

prompt = "I want to build a robot that can detecting objects in an image ‘cat.jpeg’. Input: [‘cat.jpeg’]"

print(get_gorilla_response(prompt, model="gorilla-7b-th-v0"))The result is shown below.

{'domain': 'Object Detection', 'api_call': \"model = torch.hub.load('datvuthanh/hybridnets', 'hybridnets', pretrained=True)\", 'api_provider': 'PyTorch', 'explanation': \"Load the pretrained HybridNets model from PyTorch Hub for object detection, which is capable of detecting objects in an image like the cat.jpeg provided.\", 'code': 'import torch\nmodel = torch.hub.load('datvuthanh/hybridnets', 'hybridnets', pretrained=True)'}" Like the Gorilla HuggingFace model, the result is now trying to call out the API from TorchHub. You can try out the model yourself; it’s all open-source and easy to access.

The developer opens the Gorilla API store if you want to access or contribute to the API dataset.

Conclusion

LLM is often unreliable to produce directly executable code, especially if we want to have API calls. Gorilla LLM tries to mitigate the pain point by fine-tuning the LLaMa-Based model with the APIBench dataset. The model is specific for API call problems, so if you have any API-related problems, then this model might be perfect for you.

Thank you, everyone, for subscribing to my newsletter. If you have something you want me to write or discuss, please comment or directly message me through my social media!