Your AI Primer: Recent GPT-Based Research You Should Know

A selection of GPT research to add to your toolkit

Artificial Intelligence, or AI, is the buzzword of the year. It seems like everyone, everywhere, is talking about it. Whether in real life or on social media, you will hear the term AI being thrown around. Much of this hype can be attributed to ChatGPT, which has transformed the landscape of generative AI.

ChatGPT was developed by OpenAI, the organization that introduced the Generative Pre-trained Transformer (GPT) concept. GPT is a type of AI model designed to generate human-like text from a given input. In summary, a GPT model is built in two stages:

Pre-training: During this stage, an artificial neural network based on the transformer architecture is trained on a large amount of unlabeled text data. Unlabeled means the text carries no human-written annotations; the model learns by predicting the next token from the tokens that precede it, so the training signal comes from the text itself.

Fine-tuning: After pre-training, the model is fine-tuned on a narrower dataset, generated with the help of human reviewers who follow guidelines or tasks. They review and rate possible model outputs for a range of example inputs.

Nearly all recent large language models (LLMs) have adopted this procedure. The fine-tuning part is especially popular: because pre-training can be costly, many teams start from an existing pre-trained model and fine-tune it further for their intended tasks.
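To make the idea of learning from unlabeled text a bit more concrete, here is a minimal sketch of how raw text can be turned into training examples for next-token prediction. The function name `next_token_pairs`, the whitespace tokenizer, and the `context_size` parameter are assumptions for illustration only; real GPT models use subword tokenizers and far longer contexts.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) pairs for next-token prediction."""
    tokens = text.split()  # whitespace tokenization, a deliberate simplification
    pairs = []
    for i in range(1, len(tokens)):
        # The "label" is simply the next token in the text itself.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


pairs = next_token_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

No human annotation is involved at any point: every target is read directly from the text, which is why this stage can scale to very large corpora.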

This article will explore some intriguing GPT-based models that can enhance our workflow. What are they? Let's dive in.

T2M-GPT

What if you could generate human motion from nothing but a text description? That is what the research by Zhang et al. (2023) sets out to do.

The T2M-GPT research attempts to merge a generative framework based on Vector Quantised Variational AutoEncoder (VQ-VAE) and Generative Pretrained Transformer (GPT). This combination produces visual motion derived from the text. You can see the result in the GIF below.

The output is a human motion sequence that can be applied in many use cases, such as animated video and game development. If you want to try the model, use the following demo notebook or the HuggingFace space.
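As a rough illustration of the vector quantization idea behind the VQ-VAE part, the sketch below maps each motion frame to the index of its nearest entry in a codebook, producing a sequence of discrete tokens that a GPT-style model could then learn to predict. The two-dimensional frames, the tiny codebook, and the function names `quantize` and `decode` are all hypothetical; this is not the T2M-GPT implementation.

```python
def quantize(frames, codebook):
    """Map each frame (a vector) to the index of its nearest codebook entry."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(codebook)), key=lambda k: squared_distance(frame, codebook[k]))
        for frame in frames
    ]


def decode(indices, codebook):
    """Turn discrete tokens back into (approximate) frames."""
    return [codebook[k] for k in indices]


# Toy codebook and toy "motion frames"; real ones are learned and high-dimensional.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
frames = [[0.1, -0.1], [0.9, 0.2], [0.2, 0.8]]

tokens = quantize(frames, codebook)
reconstructed = decode(tokens, codebook)
print(tokens)
print(reconstructed)
```

Once motion is expressed as discrete tokens like this, generating motion from text looks much like generating text: the model predicts one token after another, and a decoder turns the tokens back into movement.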

SpeechGPT

SpeechGPT is a research project by Zhang et al. (2023) that aims to create a large language model with intrinsic cross-modal conversational abilities. The model is intended to understand both text and audio input and to respond with both text and audio.

This capability could power a personal assistant, tutor, conversational trainer, and more. It is research that might also improve the accessibility of LLMs. The model demo can be seen on their project page or in the video below.

To keep up with further releases of the model, you can follow the research on the following page.

StructGPT

StructGPT is research by Jiang et al. (2023) that aims to improve how large language models (LLMs) understand structured data. Most LLM research focuses on text generation, which doesn't involve structured data. With StructGPT, the authors want to extend the LLM's capabilities to structured data as well.

Instead of creating a new model, the team developed StructGPT as an approach named Iterative Reading-then-Reasoning (IRR) for tackling tasks that rely on structured data. To put it briefly, this method employs specific functions to gather data from structured sources, allowing the LLM to execute tasks based on the collected data and the posed question. You can see the overall structure of StructGPT in the image below.

You can visit their page to follow the research and replicate the experiment.
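The reading-then-reasoning idea can be sketched as a loop that alternates between reading from a structured source through small functions and asking the LLM to reason over what was read. Everything below is a hypothetical illustration: the table, the function names, and the two `fake_llm_*` stand-ins (simple rules in place of real model calls) are assumptions and not the StructGPT code.

```python
TABLE = [
    {"city": "Paris", "country": "France", "population_m": 2.1},
    {"city": "Lyon", "country": "France", "population_m": 0.5},
    {"city": "Berlin", "country": "Germany", "population_m": 3.6},
]


def get_column_names(table):
    """Reading step: expose the table schema."""
    return list(table[0].keys())


def get_column_values(table, columns):
    """Reading step: extract only the requested columns."""
    return [{c: row[c] for c in columns} for row in table]


def fake_llm_select_columns(question, columns):
    """Reasoning step (stand-in for an LLM): keep columns mentioned in the question."""
    q = question.lower()
    return [c for c in columns if c.split("_")[0] in q]


def fake_llm_answer(question, rows):
    """Reasoning step (stand-in for an LLM): answer from the collected evidence."""
    return max(rows, key=lambda r: r["population_m"])["city"]


def answer(question, table):
    columns = get_column_names(table)                      # read
    relevant = fake_llm_select_columns(question, columns)  # reason
    rows = get_column_values(table, relevant)              # read
    return fake_llm_answer(question, rows)                 # reason


question = "Which city has the largest population?"
relevant_columns = fake_llm_select_columns(question, get_column_names(TABLE))
result = answer(question, TABLE)
print(relevant_columns, result)
```

The point of the design is that the model never has to ingest the whole table at once; it only sees the slices that the reading functions return at each step.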

DreamGPT

Many people point out that LLMs aren't always accurate because they don't understand the natural world and can make stuff up. Some of the results feel like a hallucination created out of nothing. This can be a problem, like when you're searching for something or reading a news story and the model gets it wrong, but it's so sure it's right that nobody notices the mistake.

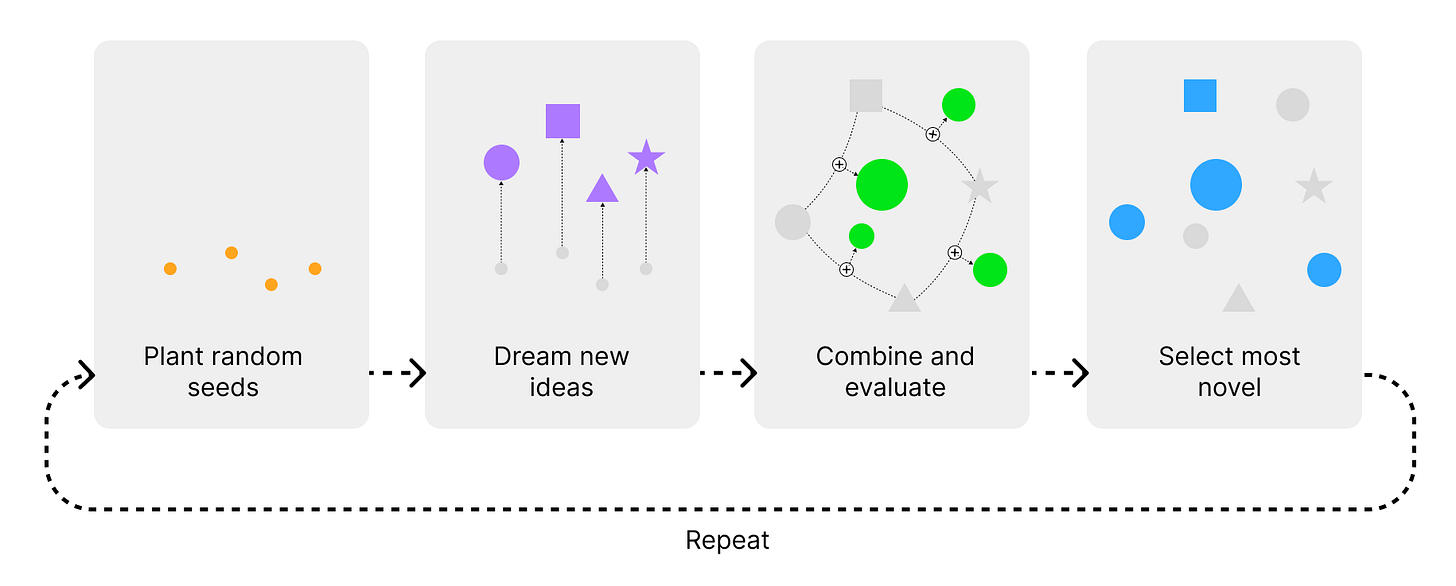

Enter DreamGPT, an open-source project by DivergentAI that uses LLM hallucinations to develop fresh ideas. Rather than focusing on solving specific tasks, DreamGPT aims to explore as many potential avenues as it can. How DreamGPT works is summarized in the image below.

DreamGPT's operation is straightforward. Initially, it picks randomly from a collection of pre-established ideas, like individual words including 'metal', 'hat', 'song', etc. Subsequently, it generates ideas from these concepts, providing each with a title and summary.

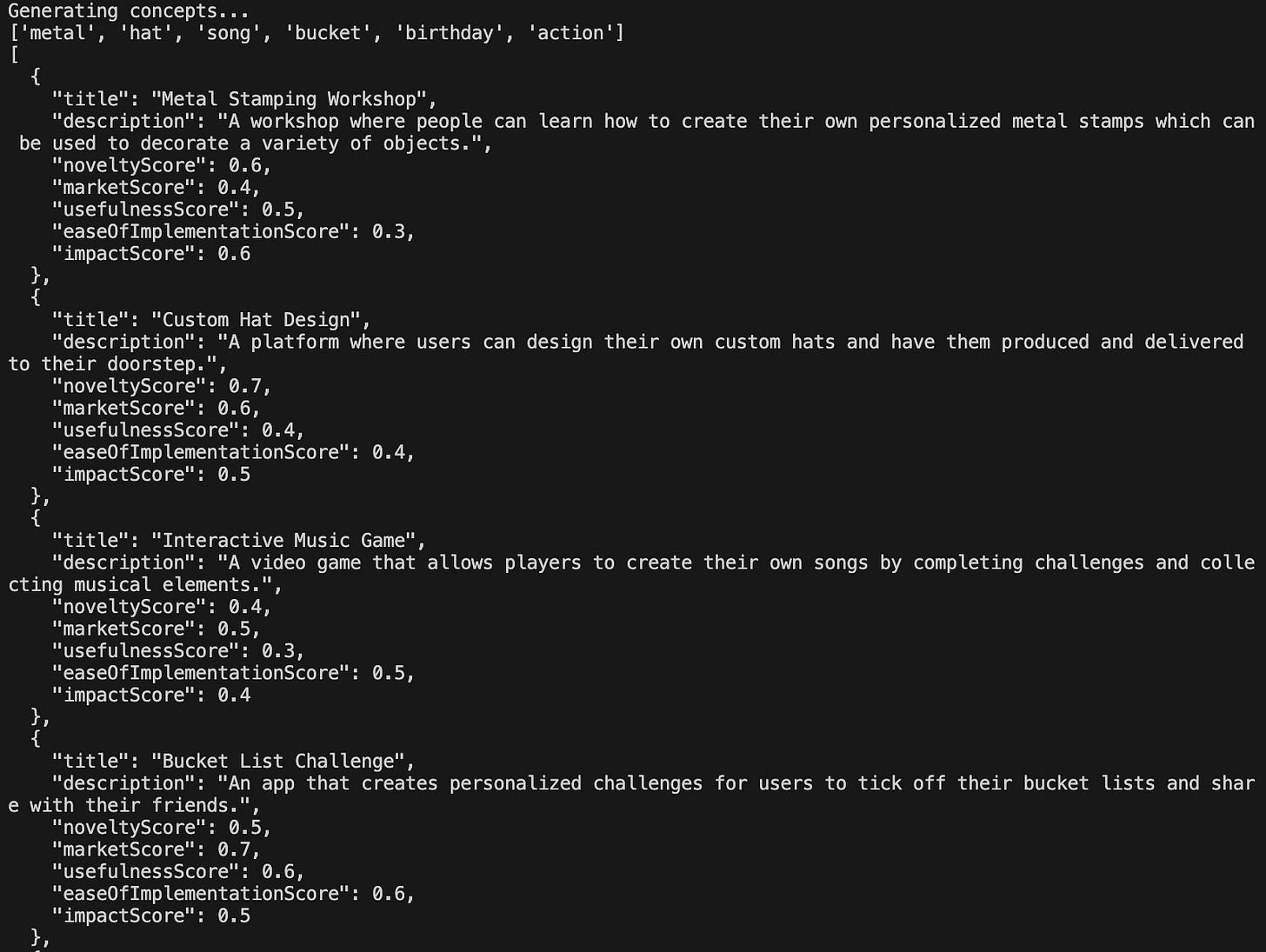

DreamGPT then appraises these derived ideas based on criteria such as novelty and marketability. It combines the scores and selects the most creative ideas as the seeds for the next round. The example result can be seen in the image below.

The result above shows several scores that indicate how promising each idea is. The scores are defined as follows.

noveltyScore: How unique the concept is compared with the other ideas.

marketScore: How much market potential the idea has.

usefulnessScore: How much benefit we could gain from using the idea.

easeOfImplementationScore: How easy it would be to turn the idea into reality.

impactScore: How positive the idea's potential impact on the world is.
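The generate, score, and select loop described above can be sketched in a few lines. This is a hedged illustration, not DreamGPT's code: `fake_llm_dream` is a hypothetical stand-in that invents random scores instead of calling a model, the 0 to 10 score range is an assumption, and combining the scores by a plain sum is also an assumption, since the exact formula is not given here.

```python
import random

SCORE_KEYS = [
    "noveltyScore",
    "marketScore",
    "usefulnessScore",
    "easeOfImplementationScore",
    "impactScore",
]


def fake_llm_dream(concepts, rng):
    """Stand-in for the LLM: returns an idea with a title, summary, and scores."""
    idea = {
        "title": " + ".join(concepts),
        "summary": f"An idea combining {', '.join(concepts)}.",
    }
    for key in SCORE_KEYS:
        idea[key] = rng.randint(0, 10)  # assumed score range
    return idea


def total_score(idea):
    """Combine the individual scores; a plain sum is an assumption."""
    return sum(idea[key] for key in SCORE_KEYS)


def dream(seed_concepts, rounds=2, ideas_per_round=4, keep=2, seed=0):
    rng = random.Random(seed)
    pool = list(seed_concepts)
    best = []
    for _ in range(rounds):
        # Pick random concepts from the pool and generate ideas from them.
        ideas = [fake_llm_dream(rng.sample(pool, 2), rng) for _ in range(ideas_per_round)]
        # Keep the highest-scoring ideas and feed them back in as new seeds.
        best = sorted(ideas, key=total_score, reverse=True)[:keep]
        pool = list(seed_concepts) + [idea["title"] for idea in best]
    return best


best_ideas = dream(["metal", "hat", "song"])
for idea in best_ideas:
    print(total_score(idea), idea["title"])
```

Feeding the winners back into the pool is what lets later rounds build on earlier ones instead of starting from the same seed words every time.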

Conclusion

GPT-based research has recently grown significantly, leading to the development of many new models and approaches. In this newsletter, we've discussed several studies that may pique your interest, including:

T2M-GPT

SpeechGPT

StructGPT

DreamGPT

I hope it helps.

Don’t forget to comment if you find this post helpful, or share what you want me to write next. Also, follow me on social media: LinkedIn and Twitter.